Key Points

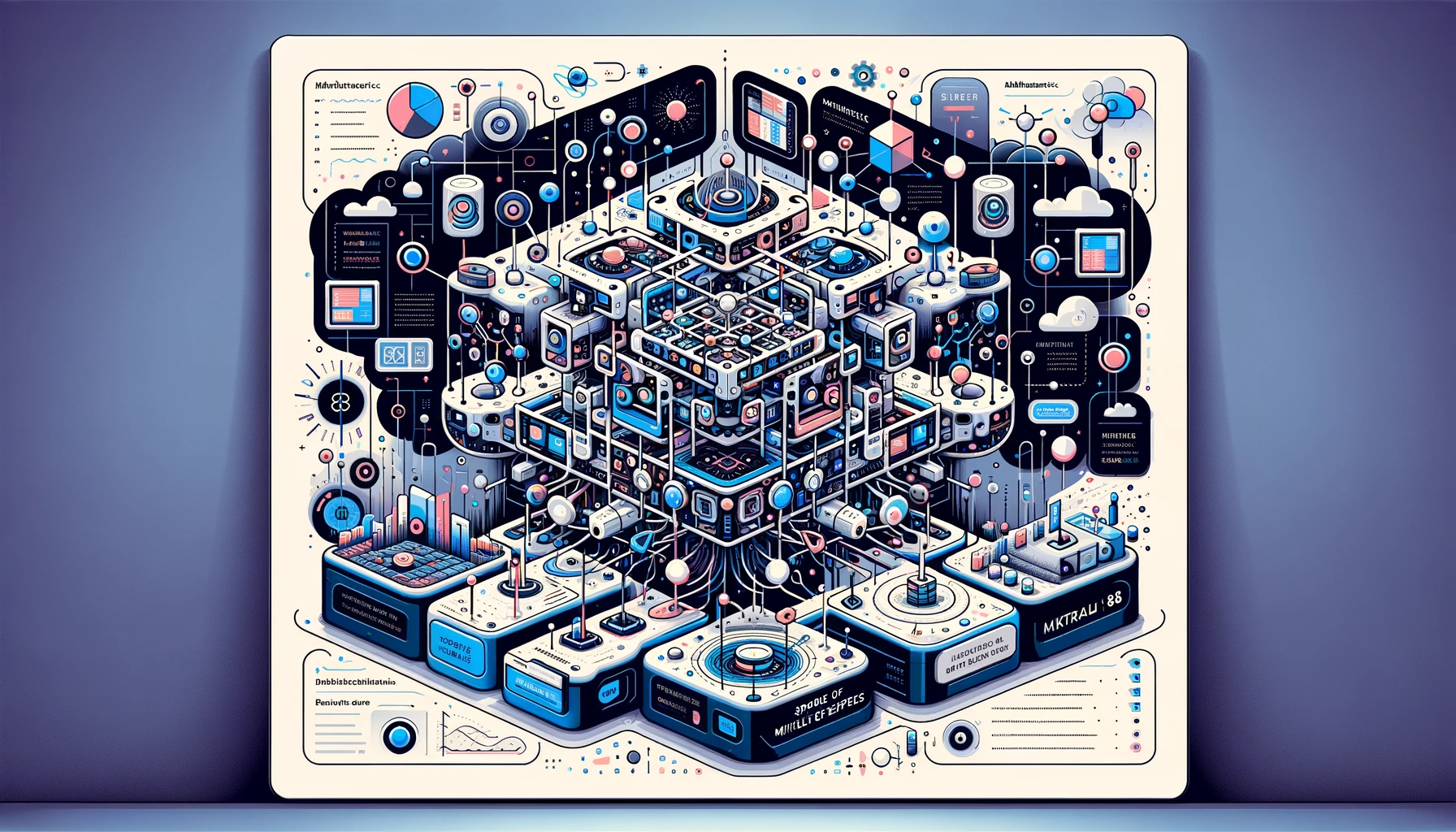

- Mixtral 8x7B is a Sparse Mixture of Experts (SMoE) language model that outperforms or matches Llama 2 70B and GPT-3.5 on the benchmarks evaluated. It uses only 13B active parameters per token during inference.

- The model is pretrained with multilingual data using a context size of 32k tokens and demonstrates superior capabilities in mathematics, code generation, and multilingual understanding, significantly outperforming Llama 2 70B in these domains.

- Mixtral 8x7B – Instruct, a chat model fine-tuned to follow instructions, surpasses GPT-3.5 Turbo, Claude-2.1, Gemini Pro, and Llama 2 70B – chat model on human evaluation benchmarks. It also shows reduced biases and a more balanced sentiment profile on bias benchmarks.

- Both the base and instruct models are open-sourced under the Apache 2.0 license, enabling broad accessibility and potential for diverse applications.

- The architecture is a transformer whose feed-forward blocks are replaced by Mixture-of-Experts layers. These layers can run efficiently on a single GPU when implemented with specialized high-performance kernels.

- Mixtral uses only 13B active parameters per token yet outperforms the previous best model, Llama 2 70B, which uses 70B parameters per token. This makes it more efficient on the cost-performance spectrum.

- Mixtral compares favorably to Llama models across a wide range of benchmarks, exhibiting superior performance in code and mathematics while using 5x fewer active parameters during inference.

- Mixtral significantly outperforms Llama 2 70B in French, German, Spanish, and Italian on multilingual benchmarks.

- The router's expert selection shows no obvious specialization by domain or topic. Instead, the router appears to exhibit structured syntactic behavior. Expert assignments also show some positional locality on The Pile datasets: consecutive tokens are often routed to the same experts.
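The positional locality mentioned in the last bullet can be illustrated with a small metric: the share of consecutive token pairs that go to the same first-choice expert. This is a minimal sketch, not the paper's evaluation code; the function name `repeat_rate` and the example assignments are hypothetical.

```python
def repeat_rate(assignments):
    """Fraction of consecutive token pairs routed to the same first-choice expert."""
    if len(assignments) < 2:
        return 0.0
    same = sum(1 for a, b in zip(assignments, assignments[1:]) if a == b)
    return same / (len(assignments) - 1)


# Hypothetical first-choice expert ids for a 10-token sequence (not real model output).
example = [3, 3, 3, 5, 5, 1, 1, 1, 1, 7]
rate = repeat_rate(example)

# With 8 experts chosen uniformly at random, the expected rate would be 1/8.
random_baseline = 1 / 8
print(f"repeat rate: {rate:.3f}, uniform baseline: {random_baseline:.3f}")
```

A rate well above the uniform baseline would indicate that neighboring tokens tend to share an expert.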

Summary

The paper introduces Mixtral 8x7B, a sparse mixture of experts model that outperforms or matches Llama 2 70B and GPT-3.5 on the benchmarks evaluated. The model uses only a subset of its parameters for each token, allowing faster inference at low batch sizes and higher throughput at large batch sizes. Mixtral excels in mathematics, code generation, multilingual understanding, and information retrieval from a context window of 32k tokens.
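To make "a subset of parameters for each token" concrete, the sketch below routes one token to the two highest-scoring of eight experts and mixes their outputs with softmax weights computed over the selected logits only. It is a toy illustration of top-2 gating under stated assumptions: the names `top_k_route` and `moe_forward` are hypothetical, and the experts are simple scaling functions rather than real feed-forward blocks.

```python
import math


def top_k_route(router_logits, k=2):
    """Return [(expert_index, weight)] for the k highest-scoring experts.

    Weights are a softmax over the selected logits only, so they sum to 1.
    """
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    peak = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, router_logits, experts, k=2):
    """Weighted sum of the outputs of the selected experts; the others never run."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(router_logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Toy stand-ins for expert networks: expert i scales its input by (i + 1).
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(8)]
logits = [0.1, 2.0, -1.0, 2.0, 0.0, -0.5, 0.3, 1.0]  # hypothetical router scores

routes = top_k_route(logits)
y = moe_forward([1.0, -2.0], logits, experts)
```

Only two of the eight experts are evaluated for this token, which is why the active parameter count per token is much smaller than the total.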

The paper also introduces Mixtral 8x7B – Instruct, a chat model fine-tuned to follow instructions, which outperforms the other chat models it is compared against. Both models are released under the Apache 2.0 license for academic and commercial use, and the authors contributed changes to the vLLM project so that the model can be run on an open-source stack. The paper describes the model architecture and compares performance with Llama 2 and GPT-3.5. It also presents Mixtral's capabilities in multilingual understanding and in long-context tasks such as passkey retrieval. Finally, it assesses biases and sentiment in the models and analyzes how the router selects experts.
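A passkey retrieval test hides a short key inside a long stretch of filler text and then asks the model to recall it. The sketch below only builds such a prompt; the filler sentences, the prompt wording, and the helper name `build_passkey_prompt` are assumptions for illustration, not the setup used in the paper.

```python
import random


def build_passkey_prompt(n_filler, passkey, seed=0):
    """Insert one passkey line at a random position among n_filler filler lines."""
    rng = random.Random(seed)  # seeded so the prompt is reproducible
    filler = "The grass is green. The sky is blue. The sun is yellow."
    lines = [filler] * n_filler
    position = rng.randint(0, n_filler)  # inclusive bounds: may land at either end
    lines.insert(position, f"The pass key is {passkey}. Remember it.")
    lines.append("What is the pass key?")
    return "\n".join(lines), position


# Hypothetical example: 50 filler lines and an arbitrary key.
prompt, position = build_passkey_prompt(n_filler=50, passkey=48213)
```

Increasing `n_filler` and varying `seed` gives prompts of different lengths with the key at different depths, which is the axis along which long-context recall is usually measured.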

Overall, the paper highlights Mixtral's advancements and contributions to the field of language modeling and its broad accessibility for diverse applications.

Reference: https://arxiv.org/abs/2401.04088