Key Points

1. The paper presents a novel Multi-disciplinary Collaboration (MC) framework for the medical domain, leveraging large language models (LLMs) in a collaborative multi-round discussion to enhance LLM proficiency and reasoning capabilities.

2. The framework is designed to address challenges faced by LLMs in the medical domain, such as limited and specialized training data, and the need for extensive domain knowledge and reasoning abilities.

3. The MC framework consists of five critical steps: gathering domain experts, proposing individual analyses, summarizing these analyses into a report, iterating over discussions until a consensus is reached, and ultimately making a decision.

4. Experimental results on nine datasets show that the MC framework outperforms zero-shot baselines and performs comparably to a strong few-shot baseline that uses self-consistency.

5. Human evaluations on error cases revealed common categories of errors, including lack of domain knowledge, mis-retrieval of domain knowledge, consistency errors, and chain-of-thought errors.

6. The paper highlights the need for future studies to further improve the MC framework by addressing the identified error categories and strengthening the model’s proficiency and reliability.

7. The proposed approach aims to mine and harness medical expertise in LLMs and enhance their reasoning competence in a training-free and interpretable manner.

8. The experiments cover the benchmark datasets MedQA, MedMCQA, PubMedQA, and six subtasks from MMLU, and focus on the zero-shot setting, which the authors consider applicable to real-world scenarios.

9. The study emphasizes the potential benefits of the MC framework in enhancing LLM proficiency and reliability in addressing clinical inquiries that demand intricate medical expertise and reasoning abilities.

Summary

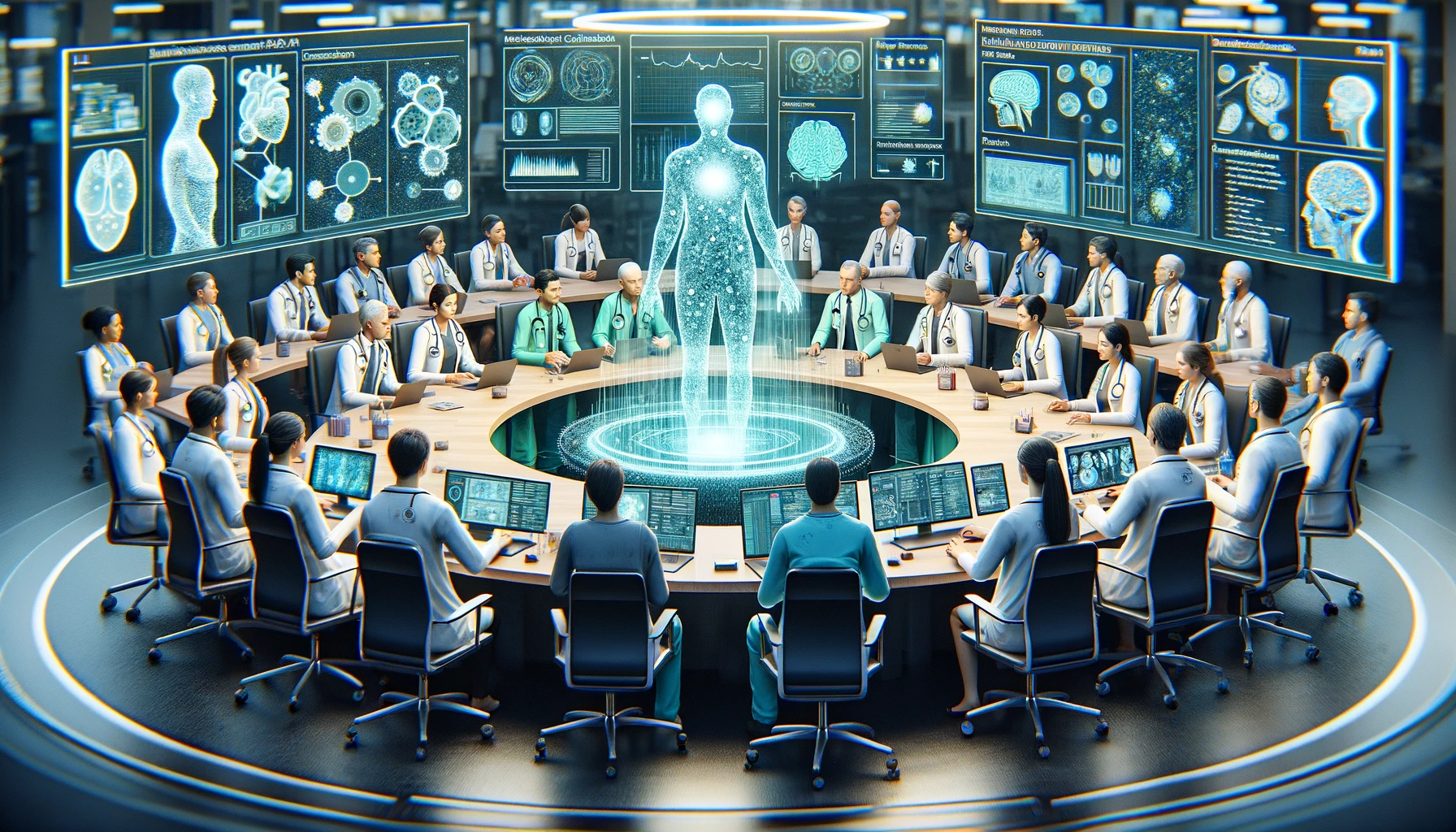

Proposed MC Framework for Addressing Challenges Faced by Large Language Models in the Medical Field

The paper presents a novel Multi-disciplinary Collaboration (MC) framework designed to address the challenges that large language models (LLMs) face in the medical field. It highlights obstacles specific to the medical domain, such as limited and specialized training data and the need for extensive domain knowledge and reasoning abilities. The MC framework uses role-playing LLM-based agents that engage in a collaborative multi-round discussion, aiming to enhance LLM proficiency and reasoning capabilities in a training-free and interpretable manner. The framework comprises five critical steps: gathering domain experts, proposing individual analyses, summarizing these analyses into a report, iterating over discussions until a consensus is reached, and ultimately making a decision.
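The five steps can be read as a simple control loop. The following Python sketch is illustrative only and is not the authors' implementation: the function name `run_mc`, the prompt wording, the `llm` callable (prompt in, text out), and the `n_experts` and `max_rounds` parameters are all assumptions introduced here to show how the steps fit together.

```python
from typing import Callable, List

# Hypothetical interface: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


def run_mc(question: str, options: List[str], llm: LLM,
           n_experts: int = 3, max_rounds: int = 3) -> str:
    """Sketch of a five-step multi-round discussion (prompts are assumptions)."""
    # Step 1: gather domain experts by asking the model for relevant specialties.
    reply = llm(f"List {n_experts} medical specialties, comma-separated, "
                f"relevant to: {question}")
    experts = [e.strip() for e in reply.split(",") if e.strip()][:n_experts]

    # Step 2: each role-playing expert proposes an individual analysis.
    analyses = [llm(f"As a {e} expert, analyze: {question}") for e in experts]

    # Step 3: summarize the individual analyses into a single report.
    report = llm("Summarize into a report:\n" + "\n".join(analyses))

    # Step 4: iterate until every expert approves or the round budget is spent.
    for _ in range(max_rounds):
        objections = []
        for e in experts:
            verdict = llm(f"As a {e} expert, reply 'yes' if you approve this "
                          f"report, otherwise give a revision:\n{report}")
            if not verdict.strip().lower().startswith("yes"):
                objections.append(verdict)
        if not objections:
            break  # consensus reached
        report = llm("Revise the report using this feedback:\n"
                     + "\n".join(objections) + "\nReport:\n" + report)

    # Step 5: make the final decision from the agreed report.
    return llm(f"Report:\n{report}\nQuestion: {question}\n"
               f"Options: {', '.join(options)}\nAnswer with one option.")
```

Because `llm` is passed in as a plain callable, the loop can be exercised with a stub before connecting a real model. The round cap is a design choice of this sketch, so that the discussion ends even if the agents never agree.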

Evaluation of the Proposed MC Framework and Experimental Results

Experimental results show that the MC framework outperforms zero-shot baselines and performs comparably to a strong few-shot baseline with self-consistency. The study also evaluates how the number of collaborating agents affects overall performance, and conducts human evaluations to identify and categorize common error types in the approach. It points to future directions for mitigating the identified drawbacks and strengthening the model's proficiency and reliability.

In conclusion, the paper presents a multi-disciplinary collaboration framework for question-answering tasks in the medical domain, demonstrating its effectiveness in leveraging medical expertise in LLMs and enhancing their reasoning competence. The study also identifies and categorizes common error types through rigorous human evaluation, providing valuable insights for future research and improvements in the framework.

Reference: https://arxiv.org/abs/2311.10537