Key Points

1. Training state-of-the-art deep neural networks is computationally expensive, and one way to reduce training time is to normalize the activities of the neurons.

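2. Batch normalization, which normalizes each neuron's summed input using a mean and variance computed over a mini-batch of training cases, significantly reduces training time in feed-forward neural networks. However, its effect depends on the mini-batch size, and it is not obvious how to apply it to recurrent neural networks.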

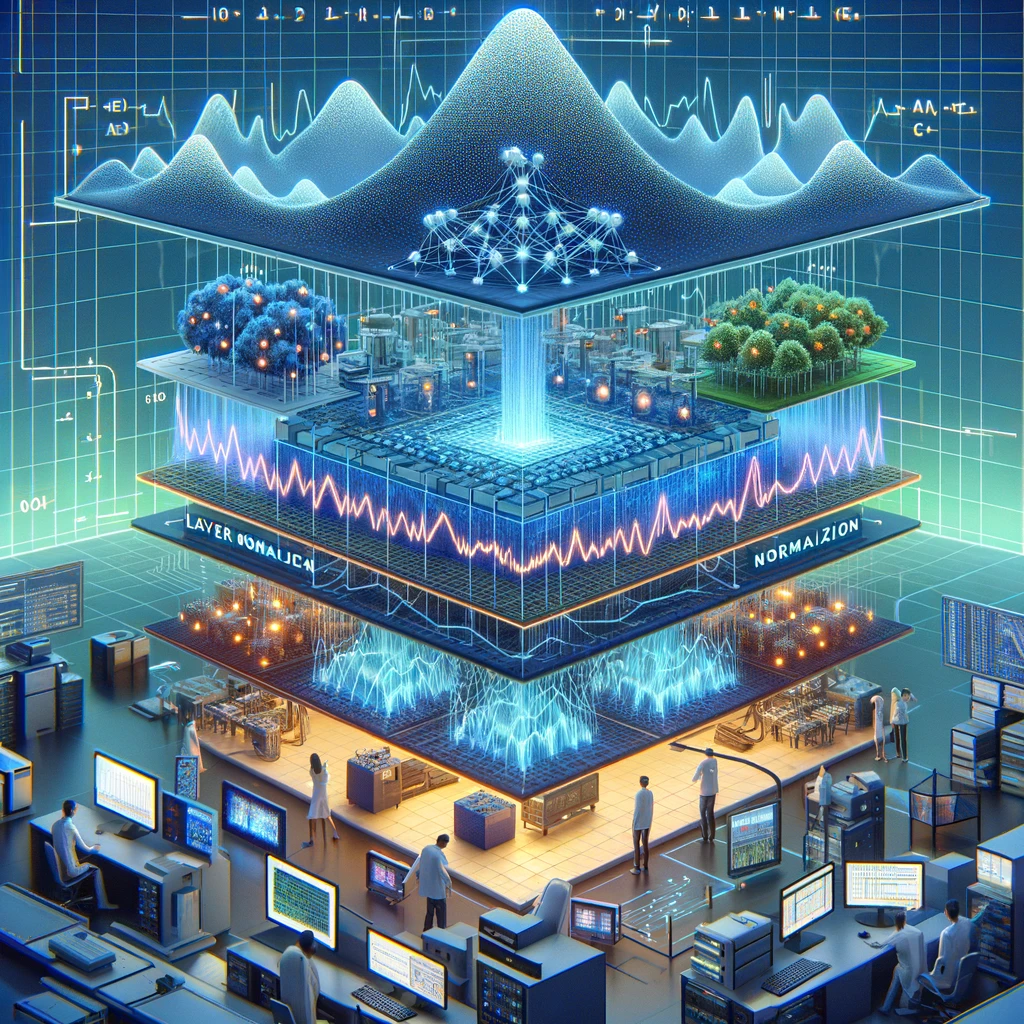

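3. Layer normalization instead computes the mean and variance used for normalization from all of the summed inputs to the neurons in a layer on a single training case. As in batch normalization, each neuron has its own adaptive gain and bias, applied after the normalization but before the non-linearity.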

4. Layer normalization is very effective at stabilizing the hidden state dynamics in recurrent networks, and empirically it substantially reduces training time compared with previously published techniques.

5. Layer normalization is straightforward to apply to recurrent networks: the normalization statistics are computed separately at each time step. Unlike batch normalization, it performs exactly the same computation at training and test times.

6. Layer normalization also improves the generalization performance of several existing RNN models. Because it imposes no constraint on the mini-batch size, it can be used in the pure online regime with batch size 1.

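7. The paper compares the invariance properties of layer normalization, batch normalization, and weight normalization under re-scaling and re-centering of the weights and of the data. For example, unlike batch normalization, layer normalization is invariant to re-centering of the weight matrix and to re-scaling of a single training case.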

8. Experiments show that on image-sentence ranking (caption and image retrieval), layer normalization offers a per-iteration speedup across the reported metrics, and that on a question-answering model it converges faster and to a better validation result than both the baseline and the batch normalization variants.

9. Layer normalization also improves downstream-task performance when training skip-thought sentence representations and speeds up convergence on handwriting sequence generation, further demonstrating its potential for speeding up training.
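For reference, the computation described in points 3 and 5 can be written out as below. This is a sketch in notation that follows the paper as closely as I can reconstruct it: $H$ is the number of hidden units in a layer, $a_i^l$ the summed input to unit $i$ of layer $l$, $g$ and $b$ the per-neuron gain and bias vectors, $f$ the non-linearity, and $\odot$ element-wise multiplication. Check the exact form against Section 3 of the paper.

```latex
% Feed-forward layer l: statistics over the H units of one training case
\begin{aligned}
a_i^l &= {w_i^l}^{\top} h^l, \qquad
\mu^l = \frac{1}{H}\sum_{i=1}^{H} a_i^l, \qquad
\sigma^l = \sqrt{\frac{1}{H}\sum_{i=1}^{H}\left(a_i^l - \mu^l\right)^2} \\
h^{l+1} &= f\!\left(\frac{g^l}{\sigma^l} \odot \left(a^l - \mu^l\right) + b^l\right)
\end{aligned}

% Recurrent layer: fresh statistics at every time step t
\begin{aligned}
a^t &= W_{hh}\, h^{t-1} + W_{xh}\, x^t, \qquad
\mu^t = \frac{1}{H}\sum_{i=1}^{H} a_i^t, \qquad
\sigma^t = \sqrt{\frac{1}{H}\sum_{i=1}^{H}\left(a_i^t - \mu^t\right)^2} \\
h^t &= f\!\left(\frac{g}{\sigma^t} \odot \left(a^t - \mu^t\right) + b\right)
\end{aligned}
```

Nothing in either formula depends on other training cases, which is why the same computation applies at training and test time and with a batch of one.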
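The recurrent case in point 5 can be illustrated with a minimal pure-Python sketch. It assumes a vanilla tanh RNN (a simplification chosen for brevity, not one of the paper's experimental models), hypothetical toy sizes and random weights, and a small `eps` stability constant that is my own addition rather than something taken from the paper. The names `layer_norm`, `matvec`, and `ln_rnn` are illustrative only.

```python
import math
import random


def layer_norm(a, gain, bias, eps=1e-5):
    """Normalize one vector of summed inputs with its own mean and std.

    Statistics are taken over the H hidden units of a single training case,
    so no mini-batch is required. `eps` is an assumed stability constant,
    not a value from the paper.
    """
    H = len(a)
    mu = sum(a) / H
    sigma = math.sqrt(sum((x - mu) ** 2 for x in a) / H + eps)
    # Per-neuron gain and bias applied after normalization, before the non-linearity
    return [g * (x - mu) / sigma + b for x, g, b in zip(a, gain, bias)]


def matvec(M, v):
    """Plain matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def ln_rnn(xs, W_hh, W_xh, gain, bias):
    """Run a vanilla tanh RNN over one sequence, layer-normalizing every step."""
    h = [0.0] * len(W_hh)
    states = []
    for x in xs:
        # Summed inputs a^t = W_hh h^{t-1} + W_xh x^t
        a = [u + v for u, v in zip(matvec(W_hh, h), matvec(W_xh, x))]
        # Fresh statistics at each time step; the same code runs at train and test time
        h = [math.tanh(z) for z in layer_norm(a, gain, bias)]
        states.append(h)
    return states


# Hypothetical toy setup: H=4 hidden units, D=3 inputs, one sequence of T=5 steps
random.seed(0)
H, D, T = 4, 3, 5
W_hh = [[random.gauss(0, 1) for _ in range(H)] for _ in range(H)]
W_xh = [[random.gauss(0, 1) for _ in range(D)] for _ in range(H)]
gain, bias = [1.0] * H, [0.0] * H
xs = [[random.gauss(0, 1) for _ in range(D)] for _ in range(T)]  # batch size 1

states = ln_rnn(xs, W_hh, W_xh, gain, bias)

# Scaling all incoming weights by 10 scales every summed input by 10,
# which layer norm removes (up to eps), so the hidden states barely change.
W_hh_big = [[10 * w for w in row] for row in W_hh]
W_xh_big = [[10 * w for w in row] for row in W_xh]
states_big = ln_rnn(xs, W_hh_big, W_xh_big, gain, bias)
drift = max(abs(p - q) for h1, h2 in zip(states, states_big) for p, q in zip(h1, h2))
print(f"max change in hidden states after 10x weight scaling: {drift:.1e}")
```

The weight-scaling check mirrors the paper's argument that normalization makes the recurrent layer invariant to re-scaling all of its summed inputs, which keeps the hidden-to-hidden dynamics from growing or shrinking step after step.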

Summary

The paper introduces layer normalization as a method for speeding up the training of neural networks and compares it with batch normalization and weight normalization. It applies layer normalization across a range of architectures and tasks, including recurrent neural networks (RNNs), feed-forward networks, convolutional neural networks, and generative modeling on the MNIST dataset (in preliminary convolutional experiments, layer normalization sped up training over an unnormalized baseline, but batch normalization performed better). The study shows that layer normalization can substantially reduce training time compared with previously published techniques and can improve the generalization performance of several existing RNN models. It also analyzes the invariance properties of layer normalization relative to batch normalization and weight normalization, and discusses its ability to stabilize the hidden state dynamics of RNNs and its effectiveness with long sequences and small mini-batches.
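To make the comparison with batch normalization concrete, here is a minimal pure-Python sketch that checks two of the invariances on hypothetical toy data, plus the batch-size-1 case. Assumptions: gain and bias are fixed to 1 and 0, `eps` is an added stability constant, batch normalization is shown only with training-mode (mini-batch) statistics rather than the stored population estimates used at test time, and weight normalization is not modeled. All function names are illustrative.

```python
import math


def normalize(values, eps=1e-5):
    """Subtract the mean and divide by the standard deviation of `values`.

    eps is an assumed stability constant; gain and bias are fixed to 1 and 0.
    """
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / len(values)
    return [(v - mu) / math.sqrt(var + eps) for v in values]


def summed_inputs(X, W):
    """Rows of the result are training cases; entry i is a_i = w_i . x."""
    return [[sum(w * x for w, x in zip(w_row, x_row)) for w_row in W] for x_row in X]


def layer_norm(A):
    """Statistics over the hidden units of each training case (each row)."""
    return [normalize(row) for row in A]


def batch_norm(A):
    """Training-mode statistics over the mini-batch for each hidden unit (each column)."""
    cols = [normalize(list(col)) for col in zip(*A)]
    return [list(row) for row in zip(*cols)]


def max_diff(P, Q):
    return max(abs(p - q) for rp, rq in zip(P, Q) for p, q in zip(rp, rq))


# Hypothetical toy data: 3 training cases, 2 inputs, 3 hidden units
X = [[1.0, 2.0], [0.5, -1.0], [3.0, 0.0]]
W = [[0.2, -0.4], [1.0, 0.3], [-0.7, 0.9]]
A = summed_inputs(X, W)

# 1. Weight matrix re-centering: add the same vector gamma to every row of W,
#    which shifts all summed inputs of case x by the same amount gamma . x.
gamma = [0.3, 0.7]
W_recentered = [[w + g for w, g in zip(row, gamma)] for row in W]
A_rc = summed_inputs(X, W_recentered)
recenter_ln = max_diff(layer_norm(A), layer_norm(A_rc))
recenter_bn = max_diff(batch_norm(A), batch_norm(A_rc))

# 2. Re-scaling a single training case: multiply only the first input vector by 5.
X_scaled = [[5.0 * x for x in X[0]]] + X[1:]
A_sc = summed_inputs(X_scaled, W)
rescale_ln = max_diff(layer_norm(A), layer_norm(A_sc))
rescale_bn = max_diff(batch_norm(A), batch_norm(A_sc))

# 3. Batch size 1: per-unit batch statistics collapse and every output becomes 0.
bn_single = batch_norm([A[0]])
ln_single = layer_norm([A[0]])

print(f"re-centering W:  LN diff {recenter_ln:.1e}, BN diff {recenter_bn:.2f}")
print(f"re-scaling case: LN diff {rescale_ln:.1e}, BN diff {rescale_bn:.2f}")
print("batch size 1:   BN", bn_single[0], "LN", [round(v, 3) for v in ln_single[0]])
```

The layer-normalized outputs are essentially unchanged in cases 1 and 2 while the batch-normalized ones shift, and with a single case the per-unit batch statistics carry no information, which is the mini-batch dependence the summary refers to.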

Additionally, the paper presents empirical evidence from experiments in several domains, such as image-sentence ranking, question answering, skip-thought sentence representations, and generative modeling, showing that layer normalization offers per-iteration speedups, faster convergence, and better generalization than the baseline models. The question-answering experiments also include a comparison with recurrent batch normalization, in which layer normalization not only trains faster but also converges to a better validation result.

The study provides a broad evaluation of layer normalization across different tasks and highlights its potential to substantially speed up the training of neural networks.

Reference: https://arxiv.org/abs/1607.06450